HP DL360 DL380 Gen8 Review

I don't usually talk about the day job here on my personal blog, but recently I have been building a number of HP Gen8 servers, and the engineering on them is so nice that it is worthy of a mention. We will start with the name, "Gen8". The first DL servers were simply called DL360 (the 1U model) and DL380 (2U) when released by Compaq (prior to the merger with HP). When they updated the design to a new generation, they called it the DL360 G2. Over the years, we have had G3, G4, G5, G6 and G7. However, it turns out that "G8", which would be pronounced "gee-ate", is Mandarin slang for "penis", so the marketing folk broke with tradition and called it "Gen8".

HP have had a tool-less server design for quite a number of years now. It is rare to delve so deeply into the server that you need to undo a Torx screw, but should the need arise, the tool is provided, clipped into the back of every server (nb: pictures taken with iPhone):

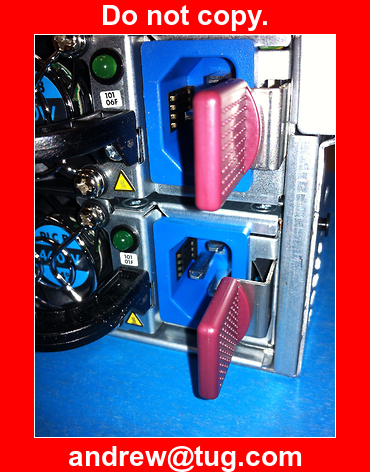

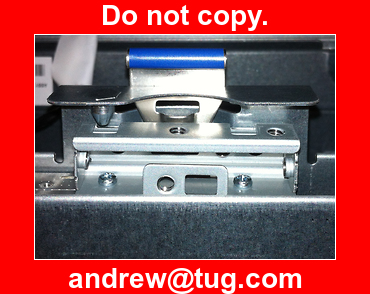

HP have brought out new racks to go with the Gen8 servers. No longer is the rack just a dumb piece of metal. There are connections on the rail at the front so that the rack can interrogate the servers and find out what is installed. The new PDU strips are also intelligent: the power cables have a data connection, so that the rack and power supplies can talk to each other. Here we see the power connectors on a DL380 Gen8, where the data connections are clearly visible inside the socket:

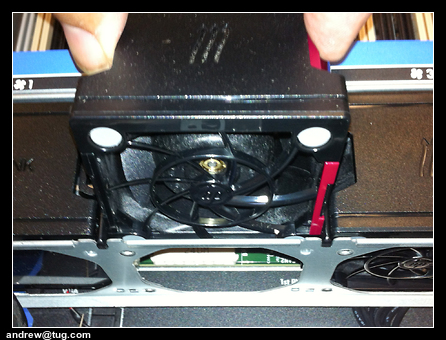

When you add more processors to a server, you need more cooling, so the processor pack comes with additional fans. Fitting them couldn't be easier - simply pull out the blank and drop the new cube into place. Just like Lego.

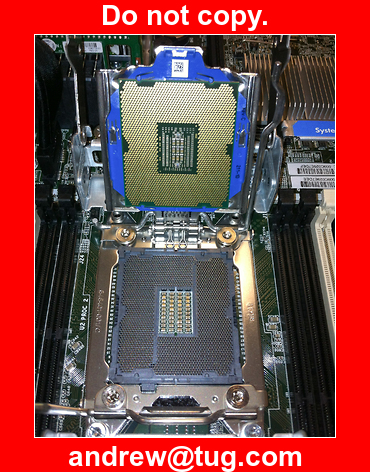

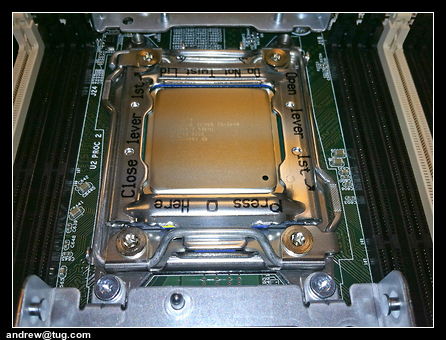

Previously, fitting processors has always been stressful. Sure, they had a cage to help you and there were notches to make sure that you couldn't fit it upside down but it was tricky to get the alignment spot on and one slip would result in hundreds of bent pins. The new design makes it a doddle. First pull up on the blue handle to remove the plastic blank and protective cover:

Then slide in the processor in its blue plastic sleeve. Unlike previous cages, which held the bare chip, it fits precisely with no uncertainty:

Next, lock in place with the levers in the order indicated:

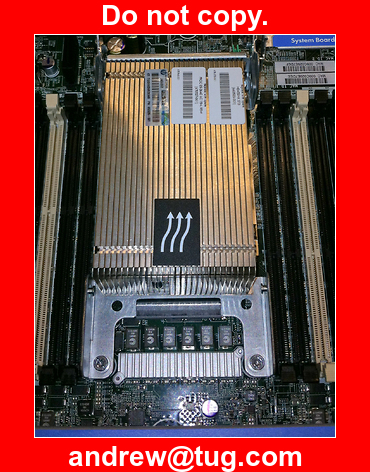

The heatsink (pre-fitted with the right amount of heat paste) is keyed to only fit the correct way:

And lock it all in place by bringing the handle back down again.

When described in words, it doesn't sound very different to the previous designs but the new Gen8 engineering simply does it better. Everything fits sweet as a nut.

Next, we slot the SD card boot media into its space on the motherboard. It might look like an ordinary SD card, but it is a special "Single Level", high reliability card, costing a healthy £45 for 4GB. The card is easy enough to fit, but it is packaged in a particularly nasty sealed plastic container, ready for retail display, which is exceedingly difficult to open.

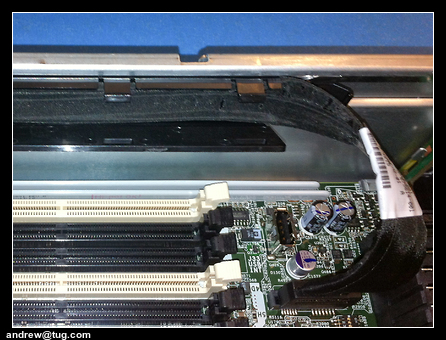

Memory is fitted in the usual way. Read the instructions on the underside of the lid of the server to find out where it goes, rip open the plastic case and fit it in the slot. HP have helpfully used white slots to identify which ones are used first. The plastic packaging is a whole heap easier to open than the SD card - you can do it by hand, but if you are kitting out a datacentre and opening hundreds in a day, you will be wishing that HP had used an easier to open tamper-evident container. Room for improvement here.

HP have been sneaky with their memory cards. For the fastest memory, they authenticate that it is genuine HP memory. Third-party memory may be capable of the same speeds, but if it isn't authenticated as HP, then the server will run it slower anyway. Shame on HP.

HP response: SmartMemory

HP have been in touch with me (the lead engineer no less!) to discuss this. They point out that in the Gen8 servers, they are now supporting faster memory than Intel support! The authentication piece restricts this enhanced functionality to HP's own memory, whilst enabling third-party memory to run up to the regular limit imposed by Intel. I guess it makes sense that if you are going to provide full support for an over-clocked configuration, you need to know exactly what you are dealing with.

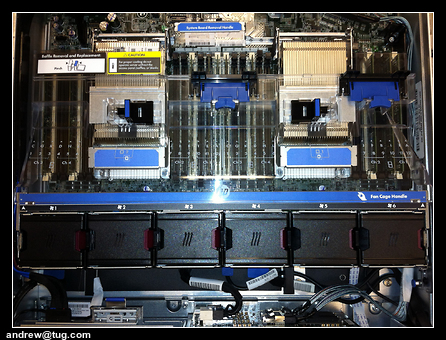

The memory and processors are fitted under a clear plastic air baffle that clips securely in place:

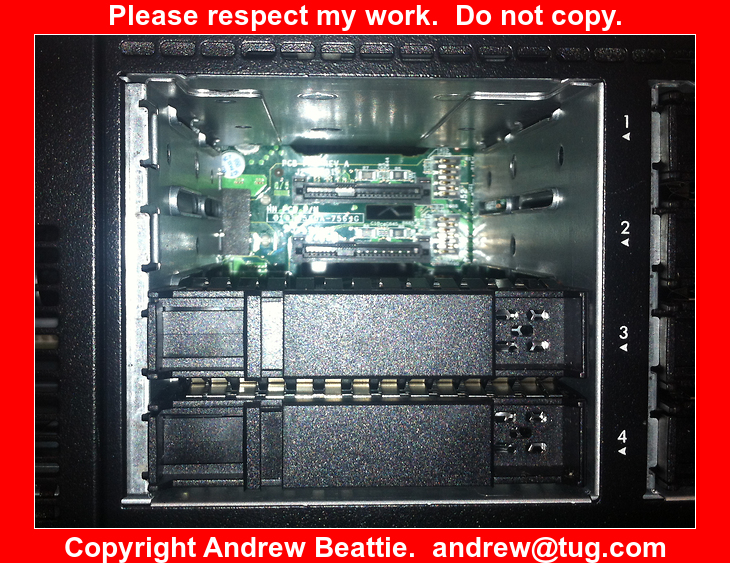

The first time I tried to fit a DVD drive, I was puzzled. By default, there is a blank in the slot and I searched in vain for a button to press or a lever to pull to remove it. I Googled for the technique but found nothing. In the end, I levered it out with a screwdriver:

It turns out that this is indeed the way to remove the blank. There is nothing fundamentally wrong with this but in the context of all the other excellent engineering it just seemed unlikely.

The DVD drive fits into the bay with a satisfying "clunk" and can be released by pushing down on the blue lever:

Even the DVD cable is thought through. The power and data come together in a flat package and fit neatly into a channel clip provided for precisely this purpose:

When you are ready to put it in the rack, HP have got this so sorted. The rails clip securely into place without resorting to captive nuts. Not only that, but they do so in just about any rack you might pick - not just HP's own racks. You pull out the rail, then drop the server into place, rear end first, where the position of the rails is most precisely aligned - small heads on the side of the chassis simply drop into slots in the rail. Then you lay the server down, making sure that the remaining 3 heads on each side drop easily into their respective slots. Press a blue button on each side and simply slide the server into place, as easy as closing your sock drawer. Beautiful and fuss free.

It is a fabulous piece of engineering. Everything is just right, everything is easy. The "out of the box" experience brings to datacentre servers the same sort of feeling that you get when you open the box of an Apple product - a feeling of appreciation for the engineering, experience and attention to detail that has gone into every aspect of the design.

Until the end. When you realise that to save a few pennies, HP has omitted two drive blanks. If, like many people, you are booting from SAN or from SD or by PXE across the network, then you may not be fitting any hard drives into the server. By default, you are left with a gaping hole in the front:

Which leaves me feeling deflated. It is not unreasonable to buy an HP server with no additional components, using PXE boot, the default memory and the 4 x 1Gb NICs that are on the motherboard, but in this configuration the server is not of merchantable quality: HP would recommend that the server not be used with drive blanks missing.

Such a shame. Outstanding engineering and design let down by some excessive penny-pinching.

HP response: Disk Blanks

HP have been in touch with me to defend this too. Their spin is that the focus is on waste, not cost. It seems that the world is slow to catch up with the virtualised reality that you don't need boot drives in servers any more and almost everyone else is still buying a couple of drives with every server. It turns out that I am the first to complain about this and they are taking it seriously. It is already fixed for factory-configured servers. They are looking at the configuration program that is used to design servers to see if that can be adjusted to specify the blanks when needed. They are fixing the spec sheet to make the situation clear. They are fixing the website which lists the blanks because the picture was wrong. I'm impressed.

Conclusion

It is one thing to advise other people how to spend their money, but the real proof is in how you spend your own money. As far as servers are concerned, that just happened. We need to upgrade the server that Tug.com is hosted on (or more strictly, we need to upgrade the physical server that Ourshack.com runs, where Tug.com is virtualised). This is paid for by a bunch of chums chipping in £10 per month to cover bandwidth and hardware costs - a meagre budget. And we need a server that will last many years. Despite working in the industry, I don't get a special price because the discounts are wrapped up with specific customers - my customers can buy these cheaper than I can! We bought a DL360 Gen8, and fitted it with 2x900GB drives and 36GB of HP memory. For Ourshack, the clincher was the drives. The Gen8 caddy is marginally smaller than the previous ones, which enables HP to squeeze up to 8 of them into the 1U form factor. Thus at present densities, we can fit 8x900GB high performance, high reliability SAS drives in the server and would expect to enjoy higher capacities during the life of the machine. We have lots of room to add more memory and we have a spare processor slot if we need it. I would expect that our investment in a Gen8 server will see us good to 2020 and beyond.
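
For anyone who wants the sums behind the drive bays, here is a minimal sketch of the raw capacity arithmetic. It assumes decimal gigabytes and ignores RAID and filesystem overhead; the function and constant names are my own, purely for illustration.

```python
# Raw (pre-RAID) capacity arithmetic for the drive bays described above.
# The names here are illustrative assumptions, not anything from HP.
DRIVE_GB = 900  # per-drive capacity, from the post
BAYS = 8        # maximum drive bays in the 1U chassis, from the post
FITTED = 2      # drives actually installed, from the post


def raw_capacity_gb(drives: int, drive_gb: int = DRIVE_GB) -> int:
    """Return raw capacity in GB, ignoring RAID and filesystem overhead."""
    return drives * drive_gb


fitted_gb = raw_capacity_gb(FITTED)
max_gb = raw_capacity_gb(BAYS)
print(f"fitted: {fitted_gb} GB, maximum: {max_gb} GB, free bays: {BAYS - FITTED}")
```

Usable space will be lower once the drives are mirrored or otherwise protected, so treat these as upper bounds.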